Taking a closer look at LHC

CERN operates some of the most complex scientific machinery ever built, relying on intricate control systems and generating petabyte upon petabyte of research data. Operating this equipment and analysing the data it gathers are both demanding tasks. CERN is therefore increasingly looking to the broad domain of artificial intelligence (AI) research to address some of the challenges it encounters in handling data, controlling particle beams, and in the upkeep of its facilities.

The modern domain of artificial intelligence came into existence around the same time that CERN did, in the mid-’50s. The term has different meanings in different contexts, and CERN is mainly interested in task-oriented, so-called restricted AI, rather than general AI involving aspects such as independent problem-solving, or even artificial consciousness. Particle physicists were among the first groups to use AI techniques in their work, adopting machine learning (ML) as far back as 1990. Beyond classical ML, physicists at CERN are also interested in using deep learning to analyse the data deluge from the LHC.

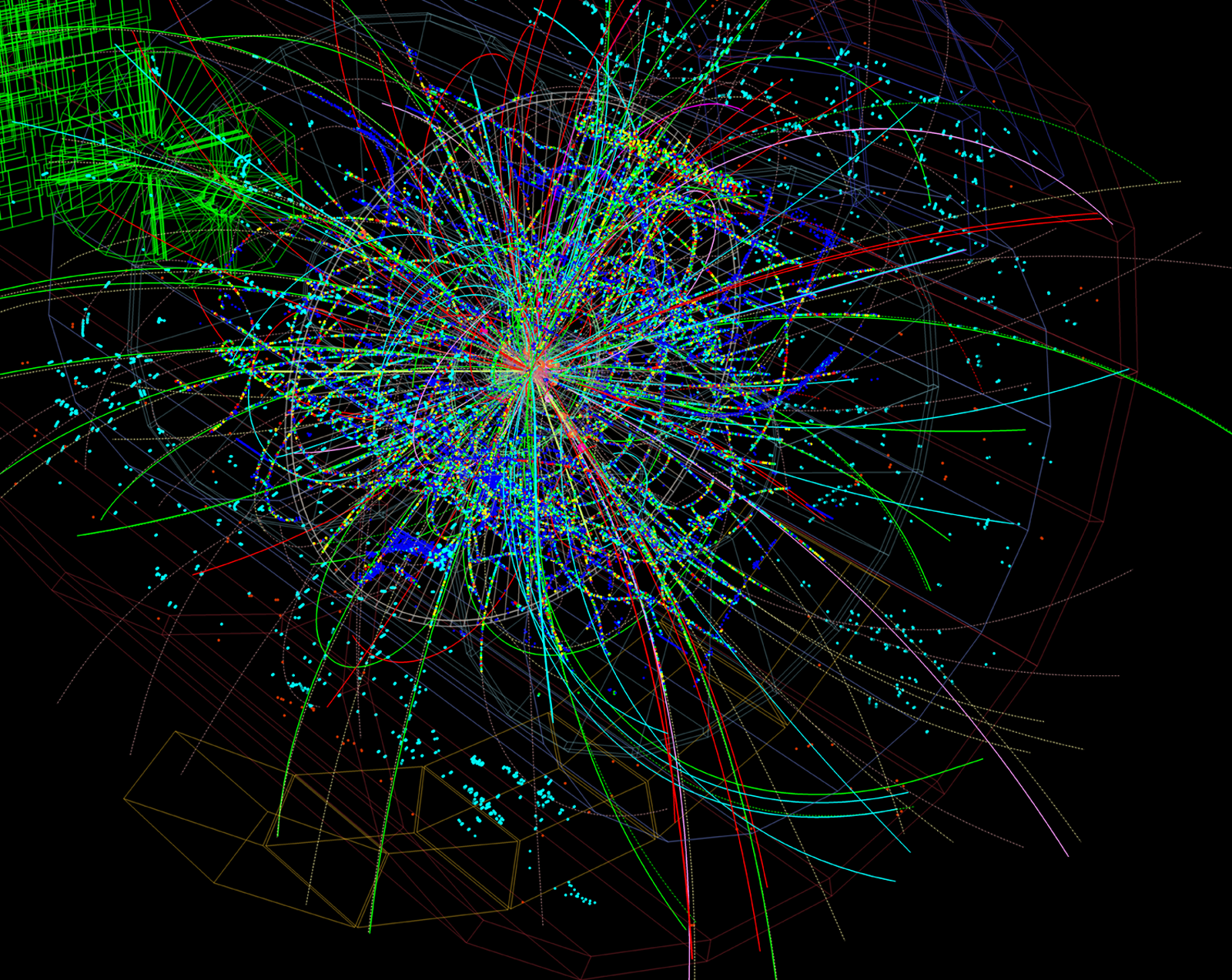

As we have already mentioned, when particles collide in accelerators, numerous cascades of secondary particles are produced. The electronics that process the incoming signals from the detectors then only have a fraction of a second to assess whether an event is of sufficient interest to be stored for later analysis. In the near future, this demanding task could be taken over by algorithms based on AI.

In fact, the LHC’s four major collaborations, ALICE, ATLAS, CMS and LHCb, have formed the Inter-experimental Machine Learning (IML) Working Group to follow developing trends in ML. Researchers are also collaborating with the wider data-science community to organise workshops that train the next generation of scientists in the use of these tools, and to produce original research in deep learning. ROOT, the software program developed by CERN and used by physicists around the world to analyse their data, also comes with machine-learning libraries.

For example, although machine learning (ML) techniques have been used in particle physics since the 1980s, and in CMS since its birth, they have traditionally relied on the highly processed inputs produced by reconstruction algorithms rather than on the more basic “raw” data provided by the particle detectors. This data-analysis paradigm was instrumental in several CMS physics analyses, including some interesting discoveries, but was not good enough to overcome the challenges posed by many exotic decays. In fact, the limiting factor was not so much the performance of the ML algorithms themselves as that of the algorithms used to turn the raw detector data into basic physics observables.

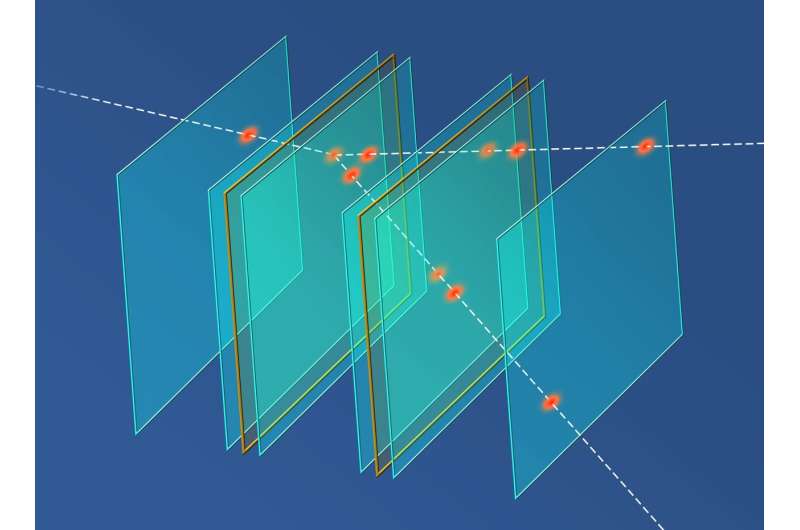

Enter modern artificial intelligence (AI) and deep learning. Thanks to this newer breed of ML algorithms, it is now possible to bypass the conventional reconstruction step and directly process the detector data. Applying AI to directly extract particle information from low-level detector data (a new approach often referred to as end-to-end particle reconstruction) promises to unlock potential information pathways that could solve some of the biggest challenges we face when searching for exotic decays.

Using Machine Learning techniques to search for exotic-looking collisions.

One of the main aims of the LHC experiments is to search for hints of new particles, which could explain many unsolved mysteries in physics. Often, searches for new physics are designed to look for one specific type of new particle at a time, relying on theoretical predictions. But what about the search for unforeseen and unanticipated particles?

Going through the billions of collisions taking place in the LHC experiments without knowing exactly what to look for would be a colossal task for physicists. That's why, instead of sifting through the data themselves in search of anomalies, the ATLAS and CMS collaborations are letting artificial intelligence (AI) do the work.

Researchers from the two collaborations use various strategies to train AI algorithms to search for unusual jets, the collimated sprays of particles produced in collisions. By studying the shape of their complex energy signatures, scientists can determine which particle created a jet.

Production in pp collisions of a dijet resonance, A, which decays to two resonances B and C, that in turn each decay to a jet with anomalous substructure arising from multiple sub-jets.
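To make the idea of a jet's "energy signature" a little more concrete, here is a minimal sketch of one of the simplest substructure observables, the invariant mass of a jet computed from its constituents. It is only an illustration: the function name `jet_mass`, the treatment of constituents as massless, and the toy numbers are all assumptions made here, and the AI algorithms used by the collaborations work with far richer inputs.

```python
import math

def jet_mass(constituents):
    """Invariant mass of a jet whose constituents are given as (pt, eta, phi).

    Constituents are treated as massless (an assumption of this sketch),
    so each one has energy E = pt * cosh(eta).
    """
    e = px = py = pz = 0.0
    for pt, eta, phi in constituents:
        px += pt * math.cos(phi)
        py += pt * math.sin(phi)
        pz += pt * math.sinh(eta)
        e += pt * math.cosh(eta)
    m2 = e * e - (px * px + py * py + pz * pz)
    # Guard against tiny negative values caused by floating-point rounding.
    return math.sqrt(max(m2, 0.0))

# Hypothetical toy jets (pt in GeV); the values are made up for illustration.
narrow_jet = [(100.0, 0.00, 0.00), (50.0, 0.01, 0.01)]    # one tight spray
two_prong_jet = [(100.0, 0.0, -0.4), (100.0, 0.0, 0.4)]   # two separated sub-jets

print(f"narrow jet mass:    {jet_mass(narrow_jet):.2f} GeV")
print(f"two-prong jet mass: {jet_mass(two_prong_jet):.2f} GeV")
```

A jet made of two well-separated sub-jets ends up with a much larger mass than a single narrow spray of the same total momentum, which is the kind of difference that hints at the particle that produced it.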

Another method is to ask the AI algorithm to examine the whole collision and look for abnormal characteristics in the different particles detected. These abnormal characteristics may indicate the presence of new particles.
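The logic of this "look for anything abnormal" strategy can be sketched in a few lines. The example below is not the method used by ATLAS or CMS: it swaps their AI algorithms for a very simple statistical score (the sum of squared deviations from typical values), and the two features per collision, the function names and all the numbers are hypothetical.

```python
import math
import random

def fit_reference(events):
    """Per-feature mean and standard deviation of a sample of ordinary events."""
    n = len(events)
    dims = len(events[0])
    means = [sum(ev[d] for ev in events) / n for d in range(dims)]
    stds = [
        # Fall back to 1.0 if a feature never varies, to avoid dividing by zero.
        math.sqrt(sum((ev[d] - means[d]) ** 2 for ev in events) / n) or 1.0
        for d in range(dims)
    ]
    return means, stds

def anomaly_score(event, means, stds):
    """Sum of squared z-scores: large when the event is far from typical."""
    return sum(((x - m) / s) ** 2 for x, m, s in zip(event, means, stds))

rng = random.Random(0)
# Hypothetical toy sample: two summary features per collision.
ordinary = [(rng.gauss(50.0, 10.0), rng.gauss(4.0, 1.0)) for _ in range(1000)]
means, stds = fit_reference(ordinary)

# Flag anything scoring above 99% of the ordinary events.
reference_scores = sorted(anomaly_score(ev, means, stds) for ev in ordinary)
threshold = reference_scores[int(0.99 * len(reference_scores))]

typical_event = (52.0, 4.2)
odd_event = (140.0, 11.0)
for name, ev in [("typical", typical_event), ("odd", odd_event)]:
    flagged = anomaly_score(ev, means, stds) > threshold
    print(f"{name} event flagged as anomalous: {flagged}")
```

The key design point carries over to the real analyses: nothing in the procedure says what a new particle should look like, only what ordinary collisions look like.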

Another approach is for the physicists to create simulated examples of possible new signals, then ask the AI to identify collisions in the real data that are different from the normal jets, but that resemble the simulation.
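This simulation-guided strategy amounts to training a classifier and then applying it to real data. The sketch below illustrates the workflow under loud assumptions: a plain logistic regression stands in for the far more capable AI models used in practice, and the two features, the sample sizes and the `data` list are invented for the example.

```python
import math
import random

def sigmoid(z):
    # Clamp the argument so math.exp never overflows.
    z = max(-30.0, min(30.0, z))
    return 1.0 / (1.0 + math.exp(-z))

def signal_probability(x, w, b):
    """Probability that event x resembles the simulated signal."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def train_logistic(samples, labels, epochs=300, lr=0.1):
    """Batch gradient descent on the logistic (cross-entropy) loss."""
    dims = len(samples[0])
    w, b, n = [0.0] * dims, 0.0, len(samples)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dims, 0.0
        for x, y in zip(samples, labels):
            err = signal_probability(x, w, b) - y
            for d in range(dims):
                grad_w[d] += err * x[d]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

rng = random.Random(1)
# Hypothetical toy samples: ordinary jets versus simulated new-physics jets.
ordinary = [(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(200)]
simulated = [(rng.gauss(3.0, 1.0), rng.gauss(3.0, 1.0)) for _ in range(200)]
samples = ordinary + simulated
labels = [0] * len(ordinary) + [1] * len(simulated)
w, b = train_logistic(samples, labels)

# Fraction of training events placed on the correct side of the 0.5 cut.
accuracy = sum(
    (signal_probability(x, w, b) > 0.5) == bool(y) for x, y in zip(samples, labels)
) / len(samples)

# Stand-in for real collisions: keep those that resemble the simulation.
data = [(0.2, -0.5), (3.1, 2.7), (-1.0, 0.4), (2.8, 3.5)]
candidates = [ev for ev in data if signal_probability(ev, w, b) > 0.5]
print(f"training accuracy: {accuracy:.2f}, candidates: {candidates}")
```

Unlike the fully unsupervised approach, this one is only as good as the simulated signal it is shown, which is why the two strategies complement each other.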

Read more at:

https://atlas.cern/Updates/Briefing/Anomaly-Detection

https://cms-results.web.cern.ch/cms-results/public-results/preliminary-results/EXO-22-026/index.html

Another example is research presented in Computer Science by scientists from the Institute of Nuclear Physics of the Polish Academy of Sciences (IFJ PAN) in Cracow, Poland. It suggests that tools built using artificial intelligence (AI) could be an effective alternative to current methods for the rapid reconstruction of particle tracks. Such tools could make their debut in the next two to three years, probably in the MUonE experiment at CERN, which supports the search for new physics.


AUTHORS

Xabier Cid Vidal holds a PhD in experimental Particle Physics from Santiago University (USC). He was a Research Fellow in experimental Particle Physics at CERN from January 2013 to December 2015. Until 2022 he was linked to the Department of Particle Physics of the USC as a "Juan de La Cierva" and "Ramon y Cajal" fellow (Spanish Postdoctoral Senior Grants) and as Associate Professor. Since 2023 he has been Senior Lecturer in that Department (ORCID).

Ramon Cid Manzano was, until his retirement in 2020, a secondary school Physics Teacher at IES de SAR (Santiago, Spain) and a part-time Lecturer (Profesor Asociado) in the Faculty of Education at the University of Santiago (Spain). He has a Degree in Physics and a Degree in Chemistry, and holds a PhD from Santiago University (USC) (ORCID).


IMPORTANT NOTICE

For the bibliography used when writing this Section please go to the References Section

© Xabier Cid Vidal & Ramon Cid - rcid@lhc-closer.es | SANTIAGO (SPAIN) |